Once you have installed the Solaris Cluster software on the Solaris 10 nodes, you can start configuring the cluster to suit your requirements. For a two-node cluster you need two Solaris 10 hosts, each with three NICs, plus shared storage. Two of the NICs on each host must be dedicated to the cluster heartbeat. You also need to set up passwordless SSH authentication for root between the two Solaris nodes before configuring the cluster. This article shows how to configure a two-node Solaris cluster.

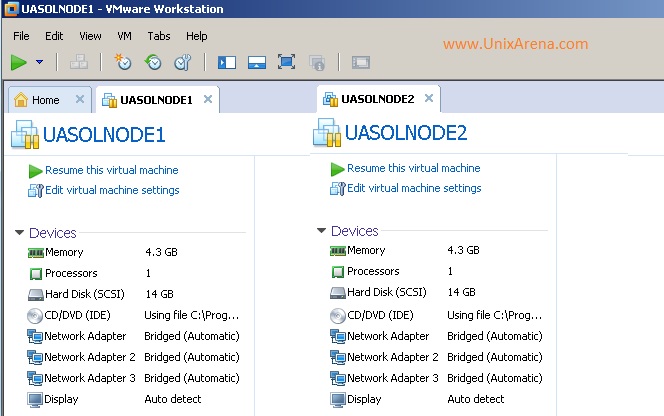

Solaris 10 hosts:

UASOL1 – 192.168.2.90

UASOL2 – 192.168.2.91

Update the /etc/hosts file on both nodes so that each node can resolve the other's host name. On UASOL1,

UASOL1:#cat /etc/hosts

#

# Internet host table

#

::1 localhost

127.0.0.1 localhost

192.168.2.90 UASOL1 loghost

192.168.2.91 UASOL2

On UASOL2,

UASOL2:#cat /etc/hosts

#

# Internet host table

#

::1 localhost

127.0.0.1 localhost

192.168.2.90 UASOL1

192.168.2.91 UASOL2 loghost
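
Before running scinstall, it may help to confirm that both host names really are present in /etc/hosts on each node. The following is a minimal sketch and not part of the original procedure: the function name missing_hosts is made up for illustration, and it assumes a POSIX shell such as bash and a POSIX awk (on Solaris 10 that would mean nawk or /usr/xpg4/bin/awk in place of awk).

```shell
# Print every host name that is NOT listed in the given hosts file.
# Usage: missing_hosts FILE NAME...
# (missing_hosts is a hypothetical helper, not a Solaris command.)
missing_hosts() {
    file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for the name in any field after the address.
        if ! sed 's/#.*//' "$file" | awk -v n="$name" '
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit(found ? 0 : 1) }'; then
            echo "$name"
        fi
    done
}
```

Running `missing_hosts /etc/hosts UASOL1 UASOL2` on each node should print nothing when both entries are in place.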

1. Log in to one of the Solaris 10 nodes on which you want to configure the Solaris cluster.

2. Navigate to the /usr/cluster/bin directory and run scinstall. Select option 1 to create a new cluster.

login as: root

Using keyboard-interactive authentication.

Password:

Last login: Tue Jun 24 10:51:29 2014 from 192.168.2.3

Oracle Corporation SunOS 5.10 Generic Patch January 2005

UASOL1:#cd /usr/cluster/bin/

UASOL1:#./scinstall

*** Main Menu ***

Please select from one of the following (*) options:

* 1) Create a new cluster or add a cluster node

2) Configure a cluster to be JumpStarted from this install server

3) Manage a dual-partition upgrade

4) Upgrade this cluster node

* 5) Print release information for this cluster node

* ?) Help with menu options

* q) Quit

Option: 1

3. Select option 1 again to create a new cluster.

*** New Cluster and Cluster Node Menu ***

Please select from any one of the following options:

1) Create a new cluster

2) Create just the first node of a new cluster on this machine

3) Add this machine as a node in an existing cluster

?) Help with menu options

q) Return to the Main Menu

Option: 1

4. Passwordless SSH authentication for root has already been set up between the two nodes, so we can continue.

*** Create a New Cluster ***

This option creates and configures a new cluster.

You must use the Oracle Solaris Cluster installation media to install

the Oracle Solaris Cluster framework software on each machine in the

new cluster before you select this option.

If the "remote configuration" option is unselected from the Oracle

Solaris Cluster installer when you install the Oracle Solaris Cluster

framework on any of the new nodes, then you must configure either the

remote shell (see rsh(1)) or the secure shell (see ssh(1)) before you

select this option. If rsh or ssh is used, you must enable root access

to all of the new member nodes from this node.

Press Control-D at any time to return to the Main Menu.

Do you want to continue (yes/no) [yes]?
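
Before answering yes here, it may be worth confirming that root can reach the other node over SSH without being prompted. A minimal sketch follows. The names check_root_ssh and the SSH override variable are hypothetical and introduced only for this example, and the BatchMode option is assumed to be supported by the ssh client on your nodes.

```shell
# Check that root can log in to a host over SSH without a password prompt.
# Usage: check_root_ssh HOST
# SSH is an optional override for the ssh binary (an assumption made for
# this sketch so the function can be exercised without a real remote host).
check_root_ssh() {
    host=$1
    # BatchMode=yes makes ssh fail instead of asking for a password.
    if ${SSH:-ssh} -o BatchMode=yes "root@$host" true; then
        echo "$host: root SSH works without a password"
    else
        echo "$host: root SSH failed or would prompt" >&2
        return 1
    fi
}
```

On UASOL1 you would run `check_root_ssh UASOL2`, and the reverse on UASOL2.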

5. Choose Custom mode, which lets you set the options covered in the following steps instead of accepting the Typical mode defaults.

>>> Typical or Custom Mode <<<

This tool supports two modes of operation, Typical mode and Custom

mode. For most clusters, you can use Typical mode. However, you might

need to select the Custom mode option if not all of the Typical mode

defaults can be applied to your cluster.

For more information about the differences between Typical and Custom

modes, select the Help option from the menu.

Please select from one of the following options:

1) Typical

2) Custom

?) Help

q) Return to the Main Menu

Option [1]: 2

6. Enter the cluster name.

>>> Cluster Name <<<

Each cluster has a name assigned to it. The name can be made up of any

characters other than whitespace. Each cluster name should be unique

within the namespace of your enterprise.

What is the name of the cluster you want to establish? UACLS1

7. Enter the host names of the Solaris 10 nodes that will participate in this cluster.

>>> Cluster Nodes <<<

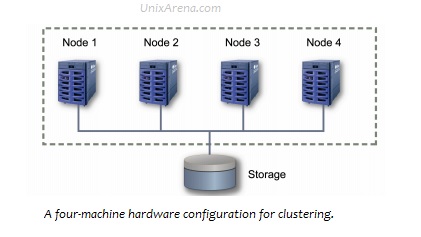

This Oracle Solaris Cluster release supports a total of up to 16

nodes.

List the names of the other nodes planned for the initial cluster

configuration. List one node name per line. When finished, type

Control-D:

Node name (Control-D to finish): UASOL1

Node name (Control-D to finish): UASOL2

Node name (Control-D to finish): ^D

This is the complete list of nodes:

UASOL1

UASOL2

Is it correct (yes/no) [yes]?

Attempting to contact "UASOL2" ... done

Searching for a remote configuration method ... done

The Oracle Solaris Cluster framework is able to complete the

configuration process without remote shell access.

8. DES authentication is not used in this setup, so accept the default (no).

>>> Authenticating Requests to Add Nodes <<<

Once the first node establishes itself as a single node cluster, other

nodes attempting to add themselves to the cluster configuration must

be found on the list of nodes you just provided. You can modify this

list by using claccess(1CL) or other tools once the cluster has been

established.

By default, nodes are not securely authenticated as they attempt to

add themselves to the cluster configuration. This is generally

considered adequate, since nodes which are not physically connected to

the private cluster interconnect will never be able to actually join

the cluster. However, DES authentication is available. If DES

authentication is selected, you must configure all necessary

encryption keys before any node will be allowed to join the cluster

(see keyserv(1M), publickey(4)).

Do you need to use DES authentication (yes/no) [no]?

9. Both Solaris nodes have two dedicated physical NICs, so accept the default of at least two private networks.

>>> Minimum Number of Private Networks <<<

Each cluster is typically configured with at least two private

networks. Configuring a cluster with just one private interconnect

provides less availability and will require the cluster to spend more

time in automatic recovery if that private interconnect fails.

Should this cluster use at least two private networks (yes/no) [yes]?

10. In this setup there is no switch in place for the cluster interconnect, so answer no.

>>> Point-to-Point Cables <<<

The two nodes of a two-node cluster may use a directly-connected

interconnect. That is, no cluster switches are configured. However,

when there are greater than two nodes, this interactive form of

scinstall assumes that there will be exactly one switch for each

private network.

Does this two-node cluster use switches (yes/no) [yes]? no

11. Select the first network adapter for the cluster heartbeat.

>>> Cluster Transport Adapters and Cables <<<

Transport adapters are the adapters that attach to the private cluster

interconnect.

Select the first cluster transport adapter:

1) e1000g1

2) e1000g2

3) Other

Option: 1

Adapter "e1000g1" is an Ethernet adapter.

Searching for any unexpected network traffic on "e1000g1" ... done

Unexpected network traffic was seen on "e1000g1".

"e1000g1" may be cabled to a public network.

Do you want to use "e1000g1" anyway (yes/no) [no]? yes

The "dlpi" transport type will be set for this cluster.

Name of adapter (physical or virtual) on "UASOL2" to which "e1000g1" is connected? e1000g1

12. Select the second network adapter for the cluster heartbeat.

Select the second cluster transport adapter:

1) e1000g1

2) e1000g2

3) Other

Option: 2

Adapter "e1000g2" is an Ethernet adapter.

Searching for any unexpected network traffic on "e1000g2" ... done

Unexpected network traffic was seen on "e1000g2".

"e1000g2" may be cabled to a public network.

Do you want to use "e1000g2" anyway (yes/no) [no]? yes

The "dlpi" transport type will be set for this cluster.

Name of adapter (physical or virtual) on "UASOL2" to which "e1000g2" is connected? e1000g2

13. Let scinstall choose the network address and netmask for the cluster transport by accepting the defaults.

>>> Network Address for the Cluster Transport <<<

The cluster transport uses a default network address of 172.16.0.0. If this IP address is already in use elsewhere within your enterprise, specify another address from the range of recommended private addresses (see RFC 1918 for details). The default netmask is 255.255.240.0. You can select another netmask, as long as it minimally masks all bits that are given in the network address. The default private netmask and network address result in an IP address range that supports a cluster with a maximum of 32 nodes, 10 private networks, and 12 virtual clusters.

Is it okay to accept the default network address (yes/no) [yes]?

Is it okay to accept the default netmask (yes/no) [yes]?

Plumbing network address 172.16.0.0 on adapter e1000g1 >> NOT DUPLICATE ... done

Plumbing network address 172.16.0.0 on adapter e1000g2 >> NOT DUPLICATE ... done
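
To see what the default netmask means in terms of address space, the arithmetic can be sketched in the shell. The helper names mask_to_prefix and addresses_in_prefix are made up for this example, and the code assumes a POSIX shell such as bash. It only handles contiguous masks and does not check that the octets are in a valid order.

```shell
# Convert a dotted-quad netmask to its prefix length.
# Usage: mask_to_prefix 255.255.240.0
# (hypothetical helper; assumes a contiguous netmask)
mask_to_prefix() {
    bits=0
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the mask into its four octets
    IFS=$old_ifs
    for octet in "$@"; do
        case $octet in
            255) bits=$((bits + 8)) ;;
            254) bits=$((bits + 7)) ;;
            252) bits=$((bits + 6)) ;;
            248) bits=$((bits + 5)) ;;
            240) bits=$((bits + 4)) ;;
            224) bits=$((bits + 3)) ;;
            192) bits=$((bits + 2)) ;;
            128) bits=$((bits + 1)) ;;
            0) ;;
            *) echo "invalid netmask octet: $octet" >&2; return 1 ;;
        esac
    done
    echo "$bits"
}

# Number of addresses covered by a prefix of the given length.
addresses_in_prefix() {
    echo $(( 1 << (32 - $1) ))
}
```

For the default netmask, `mask_to_prefix 255.255.240.0` gives 20, and `addresses_in_prefix 20` gives 4096, which is the pool that the cluster divides among its private networks.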

14. Leave fencing turned on.

>>> Set Global Fencing <<<

Fencing is a mechanism that a cluster uses to protect data integrity

when the cluster interconnect between nodes is lost. By default,

fencing is turned on for global fencing, and each disk uses the global

fencing setting. This screen allows you to turn off the global

fencing.

Most of the time, leave fencing turned on. However, turn off fencing

when at least one of the following conditions is true: 1) Your shared

storage devices, such as Serial Advanced Technology Attachment (SATA)

disks, do not support SCSI; 2) You want to allow systems outside your

cluster to access storage devices attached to your cluster; 3) Oracle

Corporation has not qualified the SCSI persistent group reservation

(PGR) support for your shared storage devices.

If you choose to turn off global fencing now, after your cluster

starts you can still use the cluster(1CL) command to turn on global

fencing.

Do you want to turn off global fencing (yes/no) [no]?

15. The resource security configuration can be changed later with the clsetup command.

>>> Resource Security Configuration <<<

The execution of a cluster resource is controlled by the setting of a

global cluster property called resource_security. When the cluster is

booted, this property is set to SECURE.

Resource methods such as Start and Validate always run as root. If

resource_security is set to SECURE and the resource method executable

file has non-root ownership or group or world write permissions,

execution of the resource method fails at run time and an error is

returned.

Resource types that declare the Application_user resource property

perform additional checks on the executable file ownership and

permissions of application programs. If the resource_security property

is set to SECURE and the application program executable is not owned

by root or by the configured Application_user of that resource, or the

executable has group or world write permissions, execution of the

application program fails at run time and an error is returned.

Resource types that declare the Application_user property execute

application programs according to the setting of the resource_security

cluster property. If resource_security is set to SECURE, the

application user will be the value of the Application_user resource

property; however, if there is no Application_user property, or it is

unset or empty, the application user will be the owner of the

application program executable file. The resource will attempt to

execute the application program as the application user; however a

non-root process cannot execute as root (regardless of property

settings and file ownership) and will execute programs as the

effective non-root user ID.

You can use the "clsetup" command to change the value of the

resource_security property after the cluster is running.

Press Enter to continue:

15. Disable automatic quorum device selection.

>>> Quorum Configuration <<<

Every two-node cluster requires at least one quorum device. By

default, scinstall selects and configures a shared disk quorum device

for you.

This screen allows you to disable the automatic selection and

configuration of a quorum device.

You have chosen to turn on the global fencing. If your shared storage

devices do not support SCSI, such as Serial Advanced Technology

Attachment (SATA) disks, or if your shared disks do not support

SCSI-2, you must disable this feature.

If you disable automatic quorum device selection now, or if you intend

to use a quorum device that is not a shared disk, you must instead use

clsetup(1M) to manually configure quorum once both nodes have joined

the cluster for the first time.

Do you want to disable automatic quorum device selection (yes/no) [no]? yes

16. Oracle Solaris Cluster 3.3u2 automatically creates the global devices file system on both systems. Accept the default lofi method for each node.

>>> Global Devices File System <<<

Each node in the cluster must have a local file system mounted on

/global/.devices/node@<nodeID> before it can successfully participate

as a cluster member. Since the "nodeID" is not assigned until

scinstall is run, scinstall will set this up for you.

You must supply the name of either an already-mounted file system or a

raw disk partition which scinstall can use to create the global

devices file system. This file system or partition should be at least

512 MB in size.

Alternatively, you can use a loopback file (lofi), with a new file

system, and mount it on /global/.devices/node@<nodeID>.

If an already-mounted file system is used, the file system must be

empty. If a raw disk partition is used, a new file system will be

created for you.

If the lofi method is used, scinstall creates a new 100 MB file system

from a lofi device by using the file /.globaldevices. The lofi method

is typically preferred, since it does not require the allocation of a

dedicated disk slice.

The default is to use lofi.

For node "UASOL1",

Is it okay to use this default (yes/no) [yes]?

For node "UASOL2",

Is it okay to use this default (yes/no) [yes]?

17. Proceed with cluster creation. Do not interrupt cluster creation for cluster check errors.

Is it okay to create the new cluster (yes/no) [yes]?

During the cluster creation process, cluster check is run on each of

the new cluster nodes. If cluster check detects problems, you can

either interrupt the process or check the log files after the cluster

has been established.

Interrupt cluster creation for cluster check errors (yes/no) [no]?

18. Once the cluster configuration is complete, scinstall reboots the other node and then reboots the local node.

Cluster Creation

Log file - /var/cluster/logs/install/scinstall.log.1215

Started cluster check on "UASOL1".

Started cluster check on "UASOL2".

cluster check failed for "UASOL1".

cluster check failed for "UASOL2".

The cluster check command failed on both of the nodes.

Refer to the log file for details.

The name of the log file is /var/cluster/logs/install/scinstall.log.1215.

Configuring "UASOL2" ... done

Rebooting "UASOL2" ... done

Configuring "UASOL1" ... done

Rebooting "UASOL1" ...

Log file - /var/cluster/logs/install/scinstall.log.1215

Rebooting ...
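
Since cluster check failed on both nodes here, the log file is worth reading once the node is back up. A small sketch, assuming only that failures are reported on lines containing the word "fail" (the actual layout of the log is not shown in this article); failed_lines is a hypothetical helper name.

```shell
# Print the numbered lines of a log file that mention a failure.
# Usage: failed_lines LOGFILE
# (hypothetical helper; matches "fail" case-insensitively)
failed_lines() {
    grep -n -i 'fail' "$1"
}
```

For this run, that would be `failed_lines /var/cluster/logs/install/scinstall.log.1215`.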

19. Once the nodes have rebooted, both nodes come up in cluster mode. Check the status with the following command.

UASOL1:#clnode status

=== Cluster Nodes ===

--- Node Status ---

Node Name Status

--------- ------

UASOL2 Online

UASOL1 Online

UASOL1:#
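
If you want to check the node status from a script rather than by eye, the clnode status output can be filtered. This is a minimal sketch that assumes the table layout shown above (a dashed rule followed by one row per node). The name offline_nodes is invented for this example, and on Solaris 10 you would likely need nawk or /usr/xpg4/bin/awk in place of awk.

```shell
# Read "clnode status" output on stdin and print the nodes that are not Online.
# (offline_nodes is a hypothetical helper, not a cluster command.)
offline_nodes() {
    awk '
        /^-+ +-+/ { in_table = 1; next }    # dashed rule: node rows follow
        in_table && NF >= 2 && $NF != "Online" { print $1 }
    '
}
```

Piping the command into it, as in `clnode status | offline_nodes`, should print nothing when every node is Online.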

20. You can see the loopback (lofi) global devices file systems on both systems.

UASOL1:#df -h |grep -i node

/dev/lofi/127 781M 5.4M 729M 1% /global/.devices/node@1

/dev/lofi/126 781M 5.4M 729M 1% /global/.devices/node@2

UASOL1:#lofiadm

Block Device File

/dev/lofi/126 /.globaldevices

UASOL1:#

21. You can also see that Solaris Cluster has plumbed new IP addresses on the private interfaces of both hosts.

UASOL1:#ifconfig -a

lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1

inet 127.0.0.1 netmask ff000000

e1000g0: flags=9000843<UP,BROADCAST,RUNNING,MULTICAST,IPv4,NOFAILOVER> mtu 1500 index 2

inet 192.168.2.90 netmask ffffff00 broadcast 192.168.2.255

groupname sc_ipmp0

ether 0:c:29:4f:bc:b8

e1000g1: flags=1008843<UP,BROADCAST,RUNNING,MULTICAST,PRIVATE,IPv4> mtu 1500 index 4

inet 172.16.0.66 netmask ffffffc0 broadcast 172.16.0.127

ether 0:c:29:4f:bc:c2

e1000g2: flags=1008843<UP,BROADCAST,RUNNING,MULTICAST,PRIVATE,IPv4> mtu 1500 index 3

inet 172.16.0.130 netmask ffffffc0 broadcast 172.16.0.191

ether 0:c:29:4f:bc:cc

clprivnet0: flags=1008843<UP,BROADCAST,RUNNING,MULTICAST,PRIVATE,IPv4> mtu 1500 index 5

inet 172.16.2.2 netmask ffffff00 broadcast 172.16.2.255

ether 0:0:0:0:0:2

UASOL1:#
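
To pick out just the cluster-owned addresses from output like the above, you can filter on the PRIVATE flag. This is a sketch under the assumption that the ifconfig layout matches what is shown here. The name private_interfaces is hypothetical, and on Solaris 10 nawk or /usr/xpg4/bin/awk may be needed in place of awk.

```shell
# Read "ifconfig -a" output on stdin and print name, address and netmask
# for every interface that carries the PRIVATE flag.
# (private_interfaces is a hypothetical helper.)
private_interfaces() {
    awk '
        # Interface header line, e.g. "e1000g1: flags=...<...PRIVATE...>"
        $1 ~ /:$/ && / flags=/ {
            name = $1
            sub(/:$/, "", name)
            private = ($0 ~ /PRIVATE/)
            next
        }
        # Address line: "inet ADDR netmask MASK ..."
        private && $1 == "inet" { print name, $2, $4 }
    '
}
```

Used as `ifconfig -a | private_interfaces`, it should list e1000g1, e1000g2 and clprivnet0 on the node shown above.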

22. The quorum devices have not been configured yet, but you can check the current voting status with the following command.

UASOL1:#clq status

=== Cluster Quorum ===

--- Quorum Votes Summary from (latest node reconfiguration) ---

Needed Present Possible

------ ------- --------

1 1 1

--- Quorum Votes by Node (current status) ---

Node Name Present Possible Status

--------- ------- -------- ------

UASOL2 1 1 Online

UASOL1 0 0 Online

UASOL1:#
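
Because automatic quorum selection was disabled earlier, a quorum device still has to be added by hand. The following is a hedged sketch of how that might look with clquorum (clq is its short form). The DID device name d2 is purely an example, so list your own shared disks first, for instance with `cldevice list -v`. The names run, configure_quorum and DRY_RUN are invented here. clquorum reset is intended to reset the vote counts and take the cluster out of install mode; check clquorum(1CL) for your release before relying on it.

```shell
# Print each command instead of running it when DRY_RUN=1.
# (run, configure_quorum and DRY_RUN are hypothetical names for this sketch.)
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Add a shared-disk quorum device, reset the quorum configuration,
# then show the resulting vote counts.
# Usage: configure_quorum DID_DEVICE    (for example d2, an assumed name)
configure_quorum() {
    did=$1
    run clquorum add "$did" &&
    run clquorum reset &&
    run clquorum status
}
```

Setting `DRY_RUN=1` before calling `configure_quorum d2` only prints the three commands, which is a safe way to review them before running anything against the cluster.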

We have successfully configured a two-node Oracle Solaris cluster on Solaris 10 update 11 x86 systems.

What’s next?

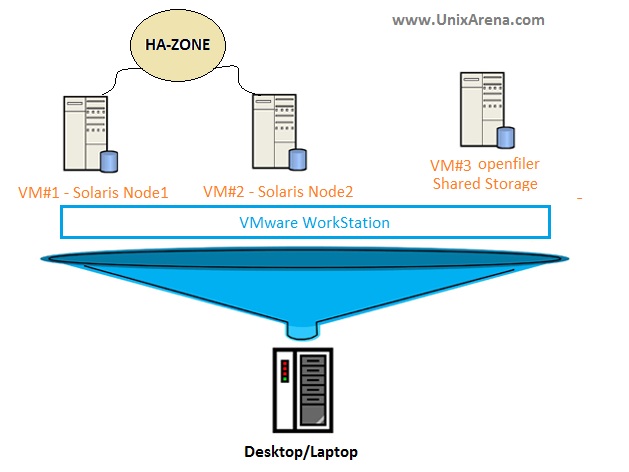

If you want to configure a Solaris cluster on VMware Workstation, refer to this article.


The post How to configure Solaris two node cluster on Solaris 10 ? appeared first on UnixArena.